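Container networking is a large topic, and Docker's native network modes are already quite capable; the official documentation covers them in full. This article only covers the modes most commonly used in day-to-day work.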

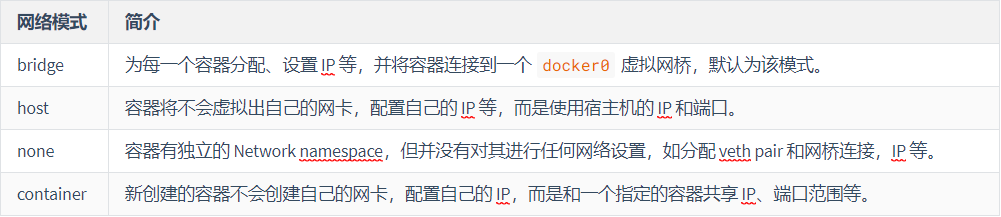

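1. Bridge mode

In this mode, the Docker daemon creates a virtual Ethernet bridge named docker0. New containers are attached to this bridge automatically, and packets are forwarded between all interfaces attached to it.

    [root@centos-linux ~]# ip addr show docker0
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:dd:54:2a:d4 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:ddff:fe54:2ad4/64 scope link
           valid_lft forever preferred_lft forever

By default, the daemon creates a pair of peer virtual interfaces (a veth pair) for each container. One end becomes the container's eth0 (the container's network card); the other end stays in the host's namespace under a name like vethxxx. This is how every container on the host ends up connected to the same internal network.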

First, start a container and look at its interface, eth0:

    [fanhaodong@centos-linux ~]$ docker run --rm -it busybox /bin/sh
    / # ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

Then look at the host. Docker on macOS uses a different network model from a Linux distribution, so the host used here is CentOS 7 (CentOS Linux release 7.9.2009).

    [root@centos-linux ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:1c:42:b8:a6:b2 brd ff:ff:ff:ff:ff:ff
        inet 192.168.56.3/24 brd 192.168.56.255 scope global noprefixroute dynamic eth0
           valid_lft 1769sec preferred_lft 1769sec
        inet6 fdb2:2c26:f4e4:0:21c:42ff:feb8:a6b2/64 scope global noprefixroute dynamic
           valid_lft 2591754sec preferred_lft 604554sec
        inet6 fe80::21c:42ff:feb8:a6b2/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:dd:54:2a:d4 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:ddff:fe54:2ad4/64 scope link
           valid_lft forever preferred_lft forever
    7: veth132f35c@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether 0e:5e:61:09:28:7c brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet6 fe80::c5e:61ff:fe09:287c/64 scope link
           valid_lft forever preferred_lft forever

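The host now has one more interface, 7: veth132f35c@if6. Comparing the two outputs confirms the veth pair described earlier: the container's eth0 is interface 6 and points at peer 7 (eth0@if7), while the host's veth132f35c is interface 7 and points back at peer 6 (@if6).

The daemon also allocates an IP address for the container from docker0's private subnet and sets docker0's IP address as the container's default gateway.

After installing the tools with yum install -y bridge-utils, the bridge can also be inspected with brctl show:

    [fanhaodong@centos-linux ~]$ brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.0242dd542ad4       no              veth132f35c

The interfaces column lists veth132f35c, the host end of the container's veth pair.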
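The pairing between a container's eth0 and its host-side veth can also be looked up instead of matched by eye. The sketch below is one way to do it, assuming the container image ships `cat` and has sysfs mounted (busybox does). The helper names `find_iface_by_index` and `host_veth_of` are made up for this example and are not part of Docker or iproute2.

```shell
# find_iface_by_index INDEX
# Reads `ip -o link` output on stdin and prints the name of the interface
# whose index is INDEX, with any "@ifN" suffix removed.
find_iface_by_index() {
  awk -F': ' -v idx="$1" '$1 == idx { sub(/@.*/, "", $2); print $2 }'
}

# host_veth_of CONTAINER
# Prints the host-side veth of a running container. Inside the container,
# /sys/class/net/eth0/iflink holds the index of eth0's peer
# (the N in "eth0@ifN").
host_veth_of() {
  peer_index=$(docker exec "$1" cat /sys/class/net/eth0/iflink) || return 1
  ip -o link | find_iface_by_index "$peer_index"
}
```

For the container in this section, `host_veth_of 66949d48a6c0` should print veth132f35c, since eth0@if7 inside the container points at interface 7 on the host.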

The details of the bridge network can be viewed with docker network inspect bridge:

    [root@centos-linux ~]# docker network inspect bridge
    [
        {
            "Name": "bridge",
            "Id": "50e703932ca8d619016f1e08df21269ee09b3f9799c81f47e5df6e477ee3c341",
            "Created": "2021-02-03T11:22:58.527865244+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.17.0.0/16",
                        "Gateway": "172.17.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "66949d48a6c0d1629240ab01c2601fd1c885e66b8e75b254b2e5537e7414914e": {
                    "Name": "modest_tesla",
                    "EndpointID": "1012aaac090fa8dac5fababa3467338a255f867b581a97539abb67477da3036a",
                    "MacAddress": "02:42:ac:11:00:02",
                    "IPv4Address": "172.17.0.2/16",
                    "IPv6Address": ""
                }
            },
            "Options": {
                "com.docker.network.bridge.default_bridge": "true",
                "com.docker.network.bridge.enable_icc": "true",
                "com.docker.network.bridge.enable_ip_masquerade": "true",
                "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
                "com.docker.network.bridge.name": "docker0",
                "com.docker.network.driver.mtu": "1500"
            },
            "Labels": {}
        }
    ]

The container name under Containers (modest_tesla) matches the one shown by docker ps:

    [root@centos-linux ~]# docker ps
    CONTAINER ID   IMAGE     COMMAND     CREATED         STATUS         PORTS     NAMES
    66949d48a6c0   busybox   "/bin/sh"   2 minutes ago   Up 2 minutes             modest_tesla

To view the network settings from the container's side, use docker inspect 66949d48a6c0 -f '{{json .NetworkSettings.Networks.bridge}}':

    [root@centos-linux ~]# docker inspect 66949d48a6c0 -f '{{json .NetworkSettings.Networks.bridge}}'
    {
        "IPAMConfig": null,
        "Links": null,
        "Aliases": null,
        "NetworkID": "50e703932ca8d619016f1e08df21269ee09b3f9799c81f47e5df6e477ee3c341",
        "EndpointID": "1012aaac090fa8dac5fababa3467338a255f867b581a97539abb67477da3036a",
        "Gateway": "172.17.0.1",
        "IPAddress": "172.17.0.2",
        "IPPrefixLen": 16,
        "IPv6Gateway": "",
        "GlobalIPv6Address": "",
        "GlobalIPv6PrefixLen": 0,
        "MacAddress": "02:42:ac:11:00:02",
        "DriverOpts": null
    }
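One detail worth noticing in these outputs: the container's MAC address 02:42:ac:11:00:02 is the prefix 02:42 followed by the four octets of 172.17.0.2 in hexadecimal. The sketch below reproduces that mapping as a quick sanity check. It is based only on the outputs shown in this article; other Docker versions, or an explicit `--mac-address`, may give different addresses, and the function name `ip_to_docker_mac` is purely illustrative.

```shell
# ip_to_docker_mac IPV4
# Prints 02:42 followed by the four IPv4 octets in hex, which is the pattern
# seen in the outputs in this article (an observation, not a guarantee).
# The body runs in a subshell so that changing IFS does not leak out.
ip_to_docker_mac() (
  IFS=.
  set -- $1
  printf '02:42:%02x:%02x:%02x:%02x\n' "$1" "$2" "$3" "$4"
)

ip_to_docker_mac 172.17.0.2
```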

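Note:

In bridge mode, containers can ping each other by IP address. The default bridge network does not resolve container names, so pinging by name does not work there.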

2. Host network mode

This mode suits running a single piece of software locally: it behaves much like launching an ordinary program on the host, while the container supplies the dependencies the program needs.

- Host network mode is selected when the container is created, with --net host or --network host.
- A container in host mode uses the host's IP address directly to talk to the outside world. If the host's eth0 has a public IP, the container has that public IP too. Services in the container also use the host's ports directly, with no NAT in between.
- Host mode lets the container share the host's network stack. The benefit is that external hosts can talk to the container directly; the cost is that the container's network is not isolated.
- Because the stack is shared, a container in host mode has exactly the same external connectivity as the host: if ping www.baidu.com fails inside the container, it fails on the host as well.

The interfaces seen inside the container are the host's own:

    [root@centos-linux .ssh]# docker run --rm -it --network host busybox ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast qlen 1000
        link/ether 00:1c:42:b8:a6:b2 brd ff:ff:ff:ff:ff:ff
        inet 192.168.56.3/24 brd 192.168.56.255 scope global dynamic eth0
           valid_lft 1128sec preferred_lft 1128sec
        inet6 fdb2:2c26:f4e4:0:21c:42ff:feb8:a6b2/64 scope global dynamic
           valid_lft 2591996sec preferred_lft 604796sec
        inet6 fe80::21c:42ff:feb8:a6b2/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue
        link/ether 02:42:dd:54:2a:d4 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:ddff:fe54:2ad4/64 scope link
           valid_lft forever preferred_lft forever

3. None network mode

None mode disables networking: the container has only the lo interface, that is, the loopback interface (127.0.0.1, localhost). It is selected when the container is created, with --net none or --network none.

In none mode Docker sets up no network environment for the container at all. Inside the container only the loopback device is available and there are no other network resources. Docker does the bare minimum here, but less is more: with nothing preconfigured, a developer is free to build whatever custom networking is needed on top. This also reflects the openness of Docker's design.

    [root@centos-linux .ssh]# docker run --rm -it --network none busybox ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever

4. Container network mode

Container mode is one of Docker's more unusual network modes. It is selected when the container is created, with --net container:<name or ID of a running container> or --network container:<name or ID of a running container>.

Containers in this mode share one network stack, so the two containers can talk to each other quickly over localhost.

Container b1:

    [root@centos-linux .ssh]# docker run --rm -it --name b1 busybox /bin/sh
    / # ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    28: eth0@if29: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

Container b2, which joins b1's network and therefore sees the same interfaces:

    [root@centos-linux ~]# docker run --rm -it --name b2 --network container:b1 busybox ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    28: eth0@if29: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

If container b1 stops at this point, b2 is left with only the lo interface.

This is what b2 shows after b1 has gone away. Once b1 is started again, b2 also has to be restarted before it sees the shared interface again.

    / # ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    / #

5. User-defined networks

1. Create a custom network. The default driver is bridge.

    [root@centos-linux /]# docker network create custom_network
    94a35aa31bdee048a0e5d8b178e4246b429d4525a4036d3d9287650933357c6e

2. Inspect the network

    [root@centos-linux /]# docker network inspect custom_network
    [
        {
            "Name": "custom_network",
            "Id": "94a35aa31bdee048a0e5d8b178e4246b429d4525a4036d3d9287650933357c6e",
            "Created": "2021-03-26T14:35:27.907496817+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "172.18.0.0/16",
                        "Gateway": "172.18.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {},
            "Options": {},
            "Labels": {}
        }
    ]

3. Start containers on the custom network

    [root@centos-linux /]# docker run --rm -d --name demo-1 --network custom_network alpine top
    93e04b37b6a7418131a1ff2ef21d45c807757746841a601a1438ffa5c6fa1061
    [root@centos-linux /]# docker run --rm -d --name demo-2 --network custom_network alpine top
    be07cf3872c42a46dc564fa8f20dee696301e8cc24dc653ffa71cb52bd4c0de3

4. Check whether the containers can ping each other

Unlike the default bridge, a user-defined network resolves container names, so the containers can ping each other by name:

    [root@centos-linux /]# docker exec -it demo-1 ping -c 2 demo-2
    PING demo-2 (172.18.0.3): 56 data bytes
    64 bytes from 172.18.0.3: seq=0 ttl=64 time=0.034 ms
    64 bytes from 172.18.0.3: seq=1 ttl=64 time=0.143 ms

    --- demo-2 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.034/0.088/0.143 ms

    [root@centos-linux /]# docker exec -it demo-2 ping -c 2 demo-1
    PING demo-1 (172.18.0.2): 56 data bytes
    64 bytes from 172.18.0.2: seq=0 ttl=64 time=0.041 ms
    64 bytes from 172.18.0.2: seq=1 ttl=64 time=0.091 ms

    --- demo-1 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.041/0.066/0.091 ms

The containers can also reach the host's IP address:

    [root@centos-linux /]# docker exec -it demo-2 ping -c 2 192.168.56.3
    PING 192.168.56.3 (192.168.56.3): 56 data bytes
    64 bytes from 192.168.56.3: seq=0 ttl=64 time=0.082 ms
    64 bytes from 192.168.56.3: seq=1 ttl=64 time=0.121 ms

    --- 192.168.56.3 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.082/0.101/0.121 ms
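In the example above Docker picked 172.18.0.0/16 for the custom network on its own. When the address range matters, the subnet and gateway can be passed explicitly, and a container that is already running can be attached afterwards with `docker network connect`. A minimal sketch, where the wrapper function names are only illustrative and the network name and addresses in the usage note are placeholders:

```shell
# create_bridge_network NAME SUBNET GATEWAY
# Creates a user-defined bridge network with an explicit address range.
create_bridge_network() {
  docker network create --driver bridge --subnet "$2" --gateway "$3" "$1"
}

# attach_container NETWORK CONTAINER
# Attaches an already-running container to an existing network.
attach_container() {
  docker network connect "$1" "$2"
}
```

For example, `create_bridge_network custom_network2 172.28.0.0/16 172.28.0.1` followed by `attach_container custom_network2 demo-1` would give demo-1 a second interface on the new network, assuming that range is free on the host.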

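6. Cross-host access

From the official documentation: some applications, especially legacy applications or applications that monitor network traffic, expect to be directly connected to the physical network. In that situation, the macvlan network driver can assign a MAC address to each container's virtual network interface, making it appear to be a physical network interface directly connected to the physical network.

SDN setups are generally built on an overlay, and with Docker in particular you quickly run into containers on different hosts being unable to talk to each other. Docker's ipvlan driver solves this well, but it requires Linux kernel 4.2 or later, and its one limitation is that communication between a host and its own containers is something you have to solve yourself.

Our company, for example, uses ipvlan for cross-host communication.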
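Since ipvlan depends on the kernel version, it is worth checking before creating the network. The helper below is a small sketch of such a check; the name `kernel_at_least` is made up for this example, and it assumes the release string starts with plain `MAJOR.MINOR` numbers, as `uname -r` normally prints on Linux.

```shell
# kernel_at_least RELEASE MAJOR MINOR
# Succeeds if RELEASE (e.g. the output of `uname -r`) is at least MAJOR.MINOR.
# The body runs in a subshell so the helper variables stay local.
kernel_at_least() (
  release=${1%%-*}        # "3.10.0-1160.el7.x86_64" -> "3.10.0"
  major=${release%%.*}    # "3"
  rest=${release#*.}      # "10.0"
  minor=${rest%%.*}       # "10"
  [ "$major" -gt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -ge "$3" ]; }
)

if kernel_at_least "$(uname -r)" 4 2; then
  echo "kernel is new enough for ipvlan"
else
  echo "kernel is older than 4.2"
fi
```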

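1. macvlan mode

Introduction

The macvlan driver was introduced around Docker 1.12. It mainly targets legacy applications and applications that monitor network traffic, which expect to be connected directly to the physical network (in other words, to sit on the physical network and be pingable like any other machine).

In this case you need to designate a physical interface on the Docker host for macvlan to use, along with the macvlan's subnet and gateway. You can even isolate macvlan networks by using different physical network interfaces. Keep the following in mind:

- It is very easy to damage your network unintentionally through IP address exhaustion or "VLAN spread", a situation in which the network contains an inappropriately large number of unique MAC addresses.
- Your networking equipment needs to be able to handle "promiscuous mode", where one physical interface can be assigned multiple MAC addresses.
- If your application can work with a bridge (on a single Docker host) or an overlay (to communicate across multiple Docker hosts), those solutions may be better in the long term.

Usage

1. The host's IP address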

    [root@centos-linux ~]# ip addr show eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:1c:42:b8:a6:b2 brd ff:ff:ff:ff:ff:ff
        inet 192.168.56.3/24 brd 192.168.56.255 scope global noprefixroute dynamic eth0
           valid_lft 1098sec preferred_lft 1098sec
        inet6 fdb2:2c26:f4e4:0:21c:42ff:feb8:a6b2/64 scope global noprefixroute dynamic
           valid_lft 2591546sec preferred_lft 604346sec
        inet6 fe80::21c:42ff:feb8:a6b2/64 scope link noprefixroute
           valid_lft forever preferred_lft forever

2. Create the macvlan network to match the host's subnet

    [root@centos-linux ~]# docker network create -d macvlan \
        --subnet=192.168.56.0/24 \
        --gateway=192.168.56.1 \
        -o parent=eth0 macvlan_net
    3adcc89a20a0a40b55b026ffcb9164990ca8c347d23445f367ed1a88f83ddd57

3. View the Docker network information

All networks:

    [root@centos-linux ~]# docker network list
    NETWORK ID     NAME          DRIVER    SCOPE
    3505ceea1e1b   bridge        bridge    local
    892b017dd40d   host          host      local
    3adcc89a20a0   macvlan_net   macvlan   local
    69e3ce2179b5   none          null      local

Details of macvlan_net:

    [root@centos-linux ~]# docker network inspect macvlan_net
    [
        {
            "Name": "macvlan_net",
            "Id": "71e776d96002b0d6977a65b6e370b3d8b71283304239e16144cdf1298f7500cc",
            "Created": "2021-03-05T19:59:54.375164139+08:00",
            "Scope": "local",
            "Driver": "macvlan",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "192.168.56.0/24",
                        "Gateway": "192.168.56.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "2a2285139cd7fb92a93b437ad6bd00b9b2d80f1918b448f22cadc03a32cc5d12": {
                    "Name": "gallant_nightingale",
                    "EndpointID": "7399fc4d01163d87b0ef078772c0abd3e1dd857eb6bcc692eb06d5cc9767008b",
                    "MacAddress": "02:42:c0:a8:38:20",
                    "IPv4Address": "192.168.56.32/24",
                    "IPv6Address": ""
                },
                "61e4e496c22ab5ca0f4bd7a44fd3c9b996f6ff81a33c6853ac94270608696974": {
                    "Name": "nervous_mendeleev",
                    "EndpointID": "e238ca83dc06eff26cc6f862d431ffac8750815870bfb2eb7fc5a498c9a81cec",
                    "MacAddress": "02:42:c0:a8:38:1f",
                    "IPv4Address": "192.168.56.31/24",
                    "IPv6Address": ""
                }
            },
            "Options": {
                "parent": "eth0"
            },
            "Labels": {}
        }
    ]

4. Create containers

1) Start them directly

If a container is started without --ip, the first one gets 192.168.56.2, the first free address in 192.168.56.0/24, because addresses are handed out in order. The catch is that Docker does not know which addresses are already in use on the physical network, so an automatically assigned address can collide with the host or with another machine, which is awkward. For that reason the containers below are given explicit addresses with --ip.

Container 1

    [root@centos-linux ~]# docker run --rm -it --net=macvlan_net --ip 192.168.56.31 alpine /bin/sh
    / # ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    8: eth0@if2: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
        link/ether 02:42:c0:a8:38:1f brd ff:ff:ff:ff:ff:ff
        inet 192.168.56.31/24 brd 192.168.56.255 scope global eth0
           valid_lft forever preferred_lft forever

Container 2

    [root@centos-linux ~]# docker run --rm -it --net=macvlan_net --ip 192.168.56.32 alpine /bin/sh
    / # ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    9: eth0@if2: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
        link/ether 02:42:c0:a8:38:20 brd ff:ff:ff:ff:ff:ff
        inet 192.168.56.32/24 brd 192.168.56.255 scope global eth0
           valid_lft forever preferred_lft forever
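To lower the risk of an automatically assigned address colliding with the host or another machine on the LAN, `docker network create` also accepts `--ip-range`, which confines automatic allocation to a sub-range of the subnet. The sketch below pairs that flag with a small check that a given address lies outside the chosen range. The range 192.168.56.128/25 and all function names are assumptions made for this example, not something used in the original setup.

```shell
# ip_to_int IPV4 -> prints the address as a 32-bit integer.
# Runs in a subshell so that changing IFS does not leak out.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# cidr_contains CIDR IPV4 -> exit status 0 if IPV4 lies inside CIDR.
cidr_contains() (
  net=${1%/*}
  bits=${1#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$net") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
)

# create_macvlan_with_range NAME PARENT SUBNET GATEWAY RANGE
# Same as the macvlan network above, plus --ip-range for automatic allocation.
create_macvlan_with_range() {
  docker network create -d macvlan \
    --subnet "$3" --gateway "$4" --ip-range "$5" \
    -o parent="$2" "$1"
}

# The host address 192.168.56.3 should fall outside the allocation range.
if cidr_contains 192.168.56.128/25 192.168.56.3; then
  echo "host address is inside the allocation range"
else
  echo "host address is outside the allocation range"
fi
```

A call such as `create_macvlan_with_range macvlan_net eth0 192.168.56.0/24 192.168.56.1 192.168.56.128/25` would then create the network with that allocation range.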

2) Ping experiments

1. The host cannot ping its own containers:

    [root@centos-linux ~]# ping 192.168.56.31 -c 2
    PING 192.168.56.31 (192.168.56.31) 56(84) bytes of data.
    From 192.168.56.3 icmp_seq=1 Destination Host Unreachable
    From 192.168.56.3 icmp_seq=2 Destination Host Unreachable

    --- 192.168.56.31 ping statistics ---
    2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1002ms
    pipe 2

    [root@centos-linux ~]# ping 192.168.56.32 -c 2
    PING 192.168.56.32 (192.168.56.32) 56(84) bytes of data.
    From 192.168.56.3 icmp_seq=1 Destination Host Unreachable
    From 192.168.56.3 icmp_seq=2 Destination Host Unreachable

    --- 192.168.56.32 ping statistics ---
    2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1007ms
    pipe 2

For background on Destination Host Unreachable, see this article: https://www.eefocus.com/communication/426853. In short, the MAC address for the target IP cannot be found on the LAN (ARP resolution fails), so the packet cannot be encapsulated into a frame. This suggests that Docker's macvlan implementation comes down to handling things at the MAC-address layer.

    [root@centos-linux ~]# ip route get 192.168.56.32
    192.168.56.32 dev eth0 src 192.168.56.3 uid 0
        cache
    # the traffic is sent out through eth0

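2. A container cannot ping the host it runs on:

    / # ping -c 2 192.168.56.3
    PING 192.168.56.3 (192.168.56.3): 56 data bytes
    ^C
    --- 192.168.56.3 ping statistics ---
    2 packets transmitted, 0 packets received, 100% packet loss
    / # ping -c 2 192.168.56.3
    PING 192.168.56.3 (192.168.56.3): 56 data bytes

    --- 192.168.56.3 ping statistics ---
    2 packets transmitted, 0 packets received, 100% packet loss

Why? With macvlan, the kernel does not pass traffic between the parent interface (the host's eth0) and the macvlan sub-interfaces created on top of it, so the host and its own macvlan containers cannot reach each other directly in either direction.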
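A commonly used workaround for this host-to-container restriction is to give the host its own macvlan sub-interface on the same parent and route the container addresses through it. The sketch below shows the idea. It has to be run as root on the host, it was not part of the original test, and the function name, the interface name `macvlan-shim` and the address 192.168.56.250 in the usage note are placeholders chosen for this example.

```shell
# add_macvlan_shim PARENT SHIM_NAME SHIM_IP CONTAINER_IP...
# Creates a macvlan sub-interface for the host itself and adds one host
# route per container address through it. Requires root.
add_macvlan_shim() {
  parent=$1 shim=$2 shim_ip=$3
  shift 3
  ip link add "$shim" link "$parent" type macvlan mode bridge || return 1
  ip addr add "$shim_ip/32" dev "$shim" || return 1
  ip link set "$shim" up || return 1
  for container_ip in "$@"; do
    ip route add "$container_ip/32" dev "$shim" || return 1
  done
}
```

For the two containers above, the call would look like `add_macvlan_shim eth0 macvlan-shim 192.168.56.250 192.168.56.31 192.168.56.32`, assuming 192.168.56.250 is unused on the LAN.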

3. Containers on the same host

Containers on the same host can reach each other:

    / # ping 192.168.56.32 -c 2
    PING 192.168.56.32 (192.168.56.32): 56 data bytes
    64 bytes from 192.168.56.32: seq=0 ttl=64 time=0.054 ms
    64 bytes from 192.168.56.32: seq=1 ttl=64 time=0.116 ms

    --- 192.168.56.32 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.054/0.085/0.116 ms

    / # ping 192.168.56.31 -c 2
    PING 192.168.56.31 (192.168.56.31): 56 data bytes
    64 bytes from 192.168.56.31: seq=0 ttl=64 time=0.055 ms
    64 bytes from 192.168.56.31: seq=1 ttl=64 time=0.114 ms

    --- 192.168.56.31 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.055/0.084/0.114 ms

4. Set up a second host

    [root@centos-4-5 ~]# ip addr show eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:1c:42:79:65:9d brd ff:ff:ff:ff:ff:ff
        inet 192.168.56.7/24 brd 192.168.56.255 scope global noprefixroute dynamic eth0
           valid_lft 1475sec preferred_lft 1475sec
        inet6 fdb2:2c26:f4e4:0:21c:42ff:fe79:659d/64 scope global noprefixroute dynamic
           valid_lft 2591565sec preferred_lft 604365sec
        inet6 fe80::21c:42ff:fe79:659d/64 scope link noprefixroute
           valid_lft forever preferred_lft forever

5. Host 2 to host 1: works

    [root@centos-4-5 ~]# ping 192.168.56.3 -c 2
    PING 192.168.56.3 (192.168.56.3) 56(84) bytes of data.
    64 bytes from 192.168.56.3: icmp_seq=1 ttl=64 time=0.585 ms
    64 bytes from 192.168.56.3: icmp_seq=2 ttl=64 time=0.521 ms

    --- 192.168.56.3 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1043ms
    rtt min/avg/max/mdev = 0.521/0.553/0.585/0.032 ms

6. Host 2 to a container on host 1: fails

    [root@centos-4-5 ~]# ping 192.168.56.31 -c 2
    PING 192.168.56.31 (192.168.56.31) 56(84) bytes of data.

    --- 192.168.56.31 ping statistics ---
    2 packets transmitted, 0 received, 100% packet loss, time 1051ms

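Summary

In this test environment macvlan turned out to be quite limited: containers on the same host could reach each other, but cross-host access to the containers did not work. This is likely tied to the promiscuous-mode requirement noted earlier (the network between these two hosts may drop frames addressed to the containers' extra MAC addresses) rather than being a limit of macvlan itself.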

2. ipvlan mode

ipvlan is more capable here: it supported cross-host communication without any extra configuration. Unlike macvlan, ipvlan sub-interfaces share the parent interface's MAC address, so the physical network only ever sees one MAC address per host.

Usage

    [root@centos-linux ~]# docker network create -d ipvlan \
        --subnet=192.168.56.0/24 \
        --gateway=192.168.56.1 \
        -o parent=eth0 ipvlan_net

Details

    [root@centos-linux ~]# docker network inspect ipvlan_net
    [
        {
            "Name": "ipvlan_net",
            "Id": "22a8b5faf9ddc2b8a7a81d67467649b331c8ccaf2a7bc7aaf25dd7344d1fed26",
            "Created": "2021-03-06T16:03:26.113213178+08:00",
            "Scope": "local",
            "Driver": "ipvlan",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "192.168.56.0/24",
                        "Gateway": "192.168.56.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "80c8bb73c329f1c0c370514c72cd25979a1b5d8aa112b49c24385d558d2d4e53": {
                    "Name": "recursing_leakey",
                    "EndpointID": "8055b00e4981d35b08f39565bde348142785565b3e43055fb719d9f7cef7fae5",
                    "MacAddress": "",
                    "IPv4Address": "192.168.56.32/24",
                    "IPv6Address": ""
                },
                "e1f96d16c0e5f9b04cc49b63cc991c89834ec6f0f599a64853bb6dddd26b3f51": {
                    "Name": "confident_hodgkin",
                    "EndpointID": "d1347ff2d6f1d8c2070d1dccad5aa29f3e3c34bc982ab026a352f071f6626dd7",
                    "MacAddress": "",
                    "IPv4Address": "192.168.56.31/24",
                    "IPv6Address": ""
                }
            },
            "Options": {
                "parent": "eth0"
            },
            "Labels": {}
        }
    ]

1. Host 1 -> container 1-2 (fails) / container 1-2 -> host 1 (fails)

    [root@centos-linux ~]# ping 192.168.56.32 -c 2
    PING 192.168.56.32 (192.168.56.32) 56(84) bytes of data.
    From 192.168.56.3 icmp_seq=1 Destination Host Unreachable
    From 192.168.56.3 icmp_seq=2 Destination Host Unreachable

    --- 192.168.56.32 ping statistics ---
    2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1072ms
    pipe 2

    / # ping 192.168.56.3 -c 2
    PING 192.168.56.3 (192.168.56.3): 56 data bytes

    --- 192.168.56.3 ping statistics ---
    2 packets transmitted, 0 packets received, 100% packet loss

2. Container 1-2 -> container 1-1 (works)

    / # ping 192.168.56.31 -c 2
    PING 192.168.56.31 (192.168.56.31): 56 data bytes
    64 bytes from 192.168.56.31: seq=0 ttl=64 time=0.099 ms
    64 bytes from 192.168.56.31: seq=1 ttl=64 time=0.188 ms

    --- 192.168.56.31 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.099/0.143/0.188 ms

3. Host 2 -> host 1 (works)

    [root@centos-4-5 ~]# ping 192.168.56.3 -c 2
    PING 192.168.56.3 (192.168.56.3) 56(84) bytes of data.
    64 bytes from 192.168.56.3: icmp_seq=1 ttl=64 time=0.478 ms
    64 bytes from 192.168.56.3: icmp_seq=2 ttl=64 time=0.551 ms

    --- 192.168.56.3 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1064ms
    rtt min/avg/max/mdev = 0.478/0.514/0.551/0.042 ms

4. Container on host 2 -> host 1 (works)

    / # ping 192.168.56.3 -c 2
    PING 192.168.56.3 (192.168.56.3): 56 data bytes
    64 bytes from 192.168.56.3: seq=0 ttl=64 time=0.469 ms
    64 bytes from 192.168.56.3: seq=1 ttl=64 time=0.605 ms

    --- 192.168.56.3 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.469/0.537/0.605 ms

5. Container 2-2 on host 2 -> container 1-2 on host 1 (works)

    / # ping 192.168.56.32 -c 2
    PING 192.168.56.32 (192.168.56.32): 56 data bytes
    64 bytes from 192.168.56.32: seq=0 ttl=64 time=0.569 ms
    64 bytes from 192.168.56.32: seq=1 ttl=64 time=0.687 ms

    --- 192.168.56.32 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.569/0.628/0.687 ms

6. Host 2 -> container 1-2 on host 1 (works)

    [root@centos-4-5 ~]# ping 192.168.56.32 -c 2
    PING 192.168.56.32 (192.168.56.32) 56(84) bytes of data.
    64 bytes from 192.168.56.32: icmp_seq=1 ttl=64 time=0.321 ms
    64 bytes from 192.168.56.32: icmp_seq=2 ttl=64 time=0.609 ms

    --- 192.168.56.32 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1022ms
    rtt min/avg/max/mdev = 0.321/0.465/0.609/0.144 ms
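Running these checks by hand gets tedious with more than a couple of hosts and containers. The sketch below wraps them in one small function that pings each target once and prints a one-line verdict. It assumes a Linux `ping` where `-W` is the timeout in seconds, and the function name `check_reach` is made up for this example.

```shell
# check_reach TARGET...
# Pings each target once (1 second timeout) and prints
# "TARGET reachable" or "TARGET unreachable".
check_reach() {
  for target in "$@"; do
    if ping -c 1 -W 1 "$target" >/dev/null 2>&1; then
      echo "$target reachable"
    else
      echo "$target unreachable"
    fi
  done
}
```

On host 2, for instance, `check_reach 192.168.56.3 192.168.56.31 192.168.56.32` covers the host and both containers on host 1 in one go.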

Summary

- ipvlan supports container communication across hosts. This relies on the hosts being able to reach each other; in practice they only need to be on the same subnet.
- It does not support communication between a host and the containers running on that same host.